Runtime objects in Unity3D

Unity3D can work with meshes created in external 3D editors, but geometry can also be created with code. And not only geometry, but materials and textures as well.

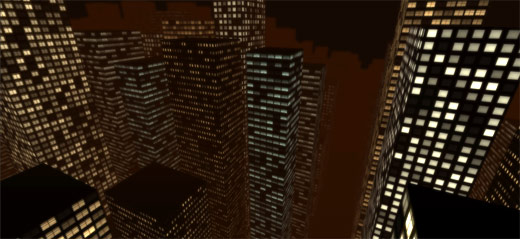

To test all these options I created a simple cityscape. I was inspired by this set of awesome pictures and by a project called Pixel City by Shamus Young.

Creating meshes

All information about the geometry of an object is stored in the Mesh object inside the MeshFilter component. A mesh is composed of an array of vertices grouped into triangles, with UV information attached to each vertex. It's a pretty common way to store 3D geometry, similar to what you can find in ActionScript: Graphics.drawTriangles expects its arguments in the same data format.

Many file formats store meshes that way too, e.g. Wavefront OBJ (which makes writing importers/exporters relatively easy, by the way).

Creating a mesh with code means populating the arrays of vertices, triangles and UVs. While it is pretty straightforward, even building a simple cube can be tricky and the code quickly becomes an unmanageable list of numbers. In the source code, the buildings are generated in the BuildingBuilder.cs file, so you can see for yourself.
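To make the three arrays concrete, here is a minimal, engine-independent sketch in Python of a single flat rectangle. It is not taken from BuildingBuilder.cs; the function name `build_quad` and the vertex ordering are assumptions made for illustration only.

```python
# Engine-independent sketch of the three arrays that describe a mesh.
# All names are illustrative; none of this comes from BuildingBuilder.cs.

def build_quad(width, height):
    """Return (vertices, triangles, uvs) for a flat rectangle in the XY plane."""
    vertices = [
        (0.0, 0.0, 0.0),                      # 0: bottom-left
        (float(width), 0.0, 0.0),             # 1: bottom-right
        (0.0, float(height), 0.0),            # 2: top-left
        (float(width), float(height), 0.0),   # 3: top-right
    ]
    # Every group of three indices into `vertices` forms one triangle,
    # so a rectangle needs two triangles (six indices).
    triangles = [0, 2, 1, 2, 3, 1]
    # One UV pair per vertex; here the whole texture is stretched over the quad.
    uvs = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0), (1.0, 1.0)]
    return vertices, triangles, uvs
```

A box-shaped building is several such rectangles sharing one set of arrays, which is why the index lists grow hard to read so quickly.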

Creating textures

We've already seen that any bitmap you import into a Unity3D project is represented by the class Texture2D.

Of course, bitmaps don't have to be imported: they can be created from scratch. The constructor of the Texture2D class takes a few arguments, including the width and height of the image.

Once a new texture is created, all its pixels are empty (i.e. black and transparent). Their values can be changed either pixel by pixel using the SetPixel method or by copying portions of other bitmaps using SetPixels.

In the above example I have 6 predefined textures with different types of windows, so I don't create those from scratch. However, I use a procedural texture for the illumination map. I generate a 64×64 bitmap where the value of each pixel determines the brightness of a single window (i.e. how much light it emits). It's pretty basic: after the Texture2D is created I just run through all its pixels and assign a random value to each pixel's alpha component.
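The idea behind the illumination map can be sketched outside Unity as a plain 64×64 grid of RGBA values. This is only an illustration of the data being generated: the function name, the optional seed, and the choice to leave the color channels at zero are assumptions, not details from the original source.

```python
import random

MAP_SIZE = 64  # one pixel per window, as described above

def make_illumination_map(size=MAP_SIZE, seed=None):
    """Return a size x size grid of (r, g, b, a) tuples with random alpha."""
    rng = random.Random(seed)
    # Only the alpha channel carries the brightness of a window.
    # Leaving r, g, b at 0.0 is an assumption made for this sketch.
    return [
        [(0.0, 0.0, 0.0, rng.random()) for _ in range(size)]
        for _ in range(size)
    ]
```

In Unity the same loop would write each value into the texture instead of a nested list.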

Creating materials

There are 6 different textures, each representing a different type of window, and one illumination map. How do we put these together? That's what materials are for. As with any other element, materials are represented in scripts by an object, Material, and they can be created with code. The constructor takes only one argument: a shader.

Shaders are one of the most crucial elements of Unity3D (and of any other 3D rendering engine, for that matter). They describe how 3D geometry will be rendered, what colors and/or textures will be used and how it will interact with light. For the buildings I use a shader called Self-Illuminated Diffuse. It's one of Unity3D's built-in shaders.

Shaders usually need some parameters to work with, like colors or textures. A material is essentially a shader plus a number of objects that define its parameters. Self-Illuminated shaders expect one texture for the color and another that determines the amount of light emitted by this texture. It's great for creating objects that emit light, like bulbs, neon signs or… windows in a night scene (it's important to understand, however, that the object emits light but this light doesn't illuminate any other objects around it).

I use the window image as the color texture, and the procedural image with random alpha values as the illumination map texture. The trick is that I set the scale of the color texture to 64. This way the window is repeated 64×64 times on the material and each pixel of the procedural illumination map (scaled 1:1) corresponds to one single window. I also set the filtering mode of the illumination map texture to Point, so that it doesn't get blurred when seen from a greater distance.

A word on optimization

The generated buildings have different widths, heights and depths. A natural way to texture all of them was to create a material that repeats the windows the appropriate number of times for each building. For example, a 4-story building with 3 windows in a row would have a material where the x-scale of the texture is set to 3 and the y-scale to 4. The emission map scale needs to be divided by 64 in this case, giving values of 3/64 and 4/64 respectively.

While this seems quite OK, it poses an optimization problem. Unity3D is much more efficient with a small number of meshes made of many vertices than the other way around, with lots of meshes of few vertices each. One building has 20 vertices and I create 2500 separate meshes! It's far from optimal, and you can really feel the framerate going down because of that. Fortunately there is a built-in script called CombineChildren that automatically combines all the buildings into one huge mesh, making everything work much faster and smoother. But (of course) there is a catch!

Unity3D will combine objects only if they share the exact same material. The scale of a texture is part of the material, so if two materials are identical except for the scale of the texture, we still need 2 different materials. In the above scenario almost every building uses a different material, unless it has exactly the same number of stories and the same number of windows in a row as another one. I had to come up with a way to use only one material, and to deal with texture coordinates in a different way.
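The arithmetic behind the per-building scales, and why it multiplies the number of materials, can be sketched like this. The function names and the representation of a building as a `(windows_per_row, stories)` pair are hypothetical, chosen only for this illustration.

```python
ATLAS_WINDOWS = 64  # the illumination map is 64 x 64 pixels, one per window

def texture_scales(windows_per_row, stories, atlas=ATLAS_WINDOWS):
    """Return (color_scale, illumination_scale) for one building."""
    # The window texture repeats once per window.
    color_scale = (windows_per_row, stories)
    # The illumination map must cover the same windows, one pixel each.
    illumination_scale = (windows_per_row / atlas, stories / atlas)
    return color_scale, illumination_scale

def count_materials(buildings):
    """Count distinct materials when the scale is stored on the material."""
    return len({texture_scales(w, s) for (w, s) in buildings})
```

For the building from the example, `texture_scales(3, 4)` gives `(3, 4)` for the color texture and `(3/64, 4/64)` for the illumination map. Any two buildings with different dimensions produce different scales, so `count_materials` grows with the variety of the city.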

The answer is to use UVs instead of texture scaling. So I started by creating only one material, which contains 64×64 windows. If a building is, e.g., 20 stories high and has 6 windows in a row, the wall of the building needs to be mapped to the texture with these coordinates: [0, 0, 6/64, 20/64]. Remember that UV values are normalized, i.e. they range from 0 to 1 across the texture: 0 is the left/bottom edge and 1 is the right/top edge (Unity3D places the UV origin at the bottom-left corner).
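As a rough sketch of this mapping, the UV rectangle for a wall can be computed directly from the window counts. The function names and the corner ordering are assumptions for illustration; the actual layout in the source code may differ.

```python
ATLAS_WINDOWS = 64  # the shared material shows 64 x 64 windows

def wall_uv_rect(windows_per_row, stories, atlas=ATLAS_WINDOWS):
    """Return (u_min, v_min, u_max, v_max) covering the wall's windows."""
    return (0.0, 0.0, windows_per_row / atlas, stories / atlas)

def wall_uvs(windows_per_row, stories, atlas=ATLAS_WINDOWS):
    """Return one UV pair per wall corner: BL, BR, TL, TR (assumed order)."""
    u0, v0, u1, v1 = wall_uv_rect(windows_per_row, stories, atlas)
    return [(u0, v0), (u1, v0), (u0, v1), (u1, v1)]
```

For the 20-story building with 6 windows in a row, `wall_uv_rect(6, 20)` returns `(0.0, 0.0, 0.09375, 0.3125)`, i.e. 6/64 and 20/64.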

This way I can reuse the same material on every building regardless of its size. It works even on buildings that are taller than 64 stories, because UV values repeat when the texture's wrap mode is Repeat: e.g. a UV value of 1.2 is the same as 0.2. Perfect!
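The repetition amounts to keeping only the fractional part of the coordinate. A tiny sketch, assuming a repeating wrap mode (the function name `wrap_uv` is made up for this example):

```python
import math

def wrap_uv(value):
    """Map a UV coordinate to the [0, 1) range the way a repeating texture does."""
    # Subtracting the floor keeps the fractional part, also for negative values.
    return value - math.floor(value)
```

An 80-story wall spans 80/64 = 1.25 of the texture height, and `wrap_uv(1.25)` lands on 0.25, so the top 16 stories simply reuse the first 16 rows of windows.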

One little problem still persists, though. Since every building uses the same material, it also has the same pattern of lit and unlit windows. It doesn't look nice. Fortunately there was an easy solution for this: offset the UVs of each building by a random value multiplied by 1/64. That way each building is mapped to a different area of the material and things look more natural.
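A possible sketch of that offset follows. It assumes the random value is a whole number of windows, so that the window texture and the illumination map pixels stay aligned; that assumption, like the function names, is mine and not confirmed by the source.

```python
import random

ATLAS_WINDOWS = 64  # the shared material shows 64 x 64 windows

def random_window_offset(rng, atlas=ATLAS_WINDOWS):
    """Pick a random (du, dv) shift that is a whole number of windows."""
    # Assumption: integer steps of 1/64 keep windows and lit pixels aligned.
    return (rng.randrange(atlas) / atlas, rng.randrange(atlas) / atlas)

def offset_uvs(uvs, offset):
    """Shift every UV pair of a building by the same offset."""
    du, dv = offset
    return [(u + du, v + dv) for (u, v) in uvs]
```

Usage: create one generator, e.g. `rng = random.Random()`, draw one offset per building, and apply it to all of that building's UVs. Values above 1 are fine because the texture repeats.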

Effects

To make the cityscape more interesting I added a Glow image effect to the camera. It adds a subtle glow around lit areas of the scene – in this case the windows, giving it a slightly blurry look.

Source code

You can grab the package with the source code here.